Poster

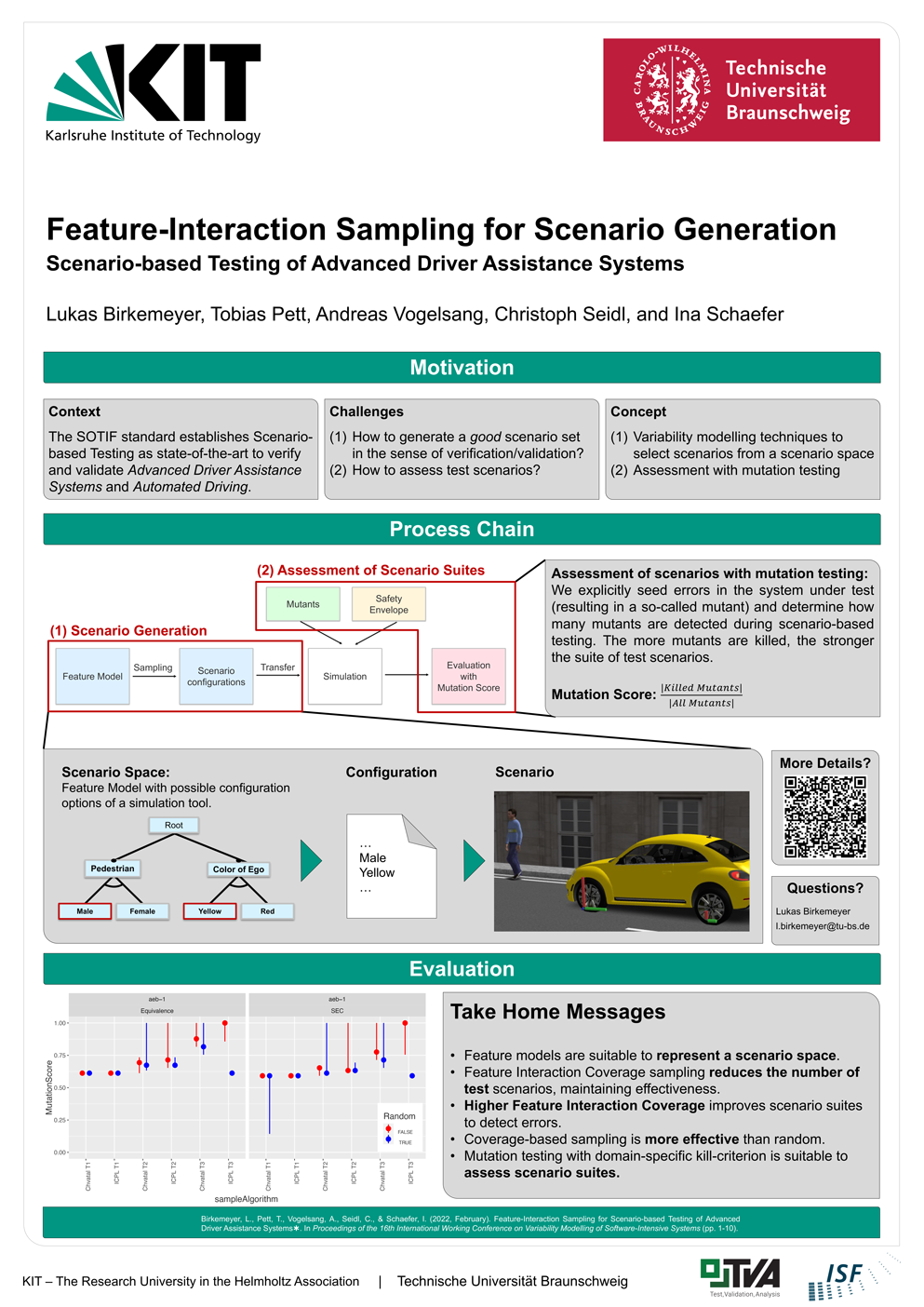

The dream of automated driving seems within reach and promises greater safety and comfort for road users. Nevertheless, the difficulty of safeguarding these automated systems still prevents their market launch. The state of the art is scenario-based testing according to the SOTIF standard (ISO 21448). However, this standard leaves two major challenges unresolved: concepts and processes for (1) generating and (2) evaluating test scenarios. Our research addresses exactly these two open questions.

(1) We use a combinatorial approach to generate scenarios. We treat a scenario as a configuration of a simulator and map the entire scenario space into a feature model. Using variability management techniques, we then select a representative subset of scenarios from this space.
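A minimal sketch of such a combinatorial selection, assuming the feature model can be flattened into a few simulator parameters with finite value sets and that "representative" is taken to mean pairwise (2-wise) coverage. The feature names, the constraint `is_valid`, and the greedy sampler `pairwise_sample` are hypothetical illustrations, not the authors' tooling. The sample covers every pair of parameter values that occurs in at least one valid configuration.

```python
from itertools import combinations, product
from typing import Dict, List, Set, Tuple

Config = Dict[str, str]
Pair = Tuple[Tuple[str, str], Tuple[str, str]]

# Hypothetical flattened feature model: each feature is a simulator parameter
# with a finite set of alternative values.
FEATURES: Dict[str, List[str]] = {
    "weather": ["sun", "rain", "fog"],
    "road": ["highway", "urban"],
    "ego_speed": ["low", "high"],
    "lead_vehicle": ["none", "braking", "cutting_in"],
}


def is_valid(config: Config) -> bool:
    """Hypothetical cross-tree constraint: no cut-in manoeuvre on urban roads."""
    return not (config["road"] == "urban" and config["lead_vehicle"] == "cutting_in")


def all_configs(features: Dict[str, List[str]]) -> List[Config]:
    """Enumerate the full scenario space (every valid simulator configuration)."""
    names = list(features)
    configs = (dict(zip(names, values)) for values in product(*features.values()))
    return [c for c in configs if is_valid(c)]


def pairs_of(config: Config) -> Set[Pair]:
    """All pairs of (feature, value) assignments that one configuration covers."""
    return set(combinations(sorted(config.items()), 2))


def pairwise_sample(features: Dict[str, List[str]]) -> List[Config]:
    """Greedily pick configurations until every feasible value pair is covered."""
    candidates = all_configs(features)
    uncovered = set().union(*(pairs_of(c) for c in candidates))
    sample: List[Config] = []
    while uncovered:
        # Choose the configuration that covers the most still-uncovered pairs.
        best = max(candidates, key=lambda c: len(pairs_of(c) & uncovered))
        sample.append(best)
        uncovered -= pairs_of(best)
    return sample


full_space = all_configs(FEATURES)
catalog = pairwise_sample(FEATURES)
print(len(full_space), len(catalog))  # size of the full space vs. the sampled catalog
```

Pairwise sampling is only one common choice: higher interaction strengths (t-wise) or dedicated feature-model samplers trade a larger catalog for more coverage, and the greedy loop is simple but not guaranteed to find the smallest covering set.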

(2) We adapt mutation testing to evaluate the generated scenarios. Mutation testing is a method from computer science that evaluates the quality of a test environment: small faults (mutants) are deliberately injected into the device under test (DUT), and the so-called mutation score measures the ability of the test environment to detect them. We use simulations to determine the mutation score of a scenario catalog and can thus evaluate the quality of this catalog.
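The mutation score is the number of killed mutants divided by the total number of (non-equivalent) mutants. The sketch below illustrates this for scenario catalogs under strong simplifying assumptions: the DUT `should_brake` is a hypothetical time-to-collision braking rule, the three mutants are hand-written, a scenario is just a (distance, speed) pair, and calling the function directly stands in for the simulation runs. A mutant counts as killed if any scenario in the catalog makes its output differ from that of the unmutated DUT.

```python
from typing import Callable, List, Tuple

Scenario = Tuple[float, float]  # (distance to obstacle in m, ego speed in m/s)
BrakeFn = Callable[[float, float], bool]

TTC_THRESHOLD_S = 2.0  # hypothetical time-to-collision threshold in seconds


def should_brake(distance: float, speed: float) -> bool:
    """Hypothetical DUT: brake if time-to-collision drops below the threshold."""
    if speed <= 0:
        return False
    return distance / speed < TTC_THRESHOLD_S


# Hand-written mutants, each injecting one small fault into the DUT.
def mutant_relational(distance: float, speed: float) -> bool:
    if speed <= 0:
        return False
    return distance / speed <= TTC_THRESHOLD_S  # '<' mutated to '<='


def mutant_constant(distance: float, speed: float) -> bool:
    if speed <= 0:
        return False
    return distance / speed < 3.0  # threshold 2.0 mutated to 3.0


def mutant_operator(distance: float, speed: float) -> bool:
    if speed <= 0:
        return False
    return distance * speed < TTC_THRESHOLD_S  # '/' mutated to '*'


MUTANTS: List[BrakeFn] = [mutant_relational, mutant_constant, mutant_operator]


def mutation_score(original: BrakeFn, mutants: List[BrakeFn], catalog: List[Scenario]) -> float:
    """Fraction of mutants killed by the catalog (original output used as the oracle)."""
    killed = sum(
        1 for mutant in mutants
        if any(mutant(d, v) != original(d, v) for d, v in catalog)
    )
    return killed / len(mutants)


weak_catalog = [(100.0, 10.0)]                               # only a harmless TTC of 10 s
strong_catalog = [(100.0, 10.0), (20.0, 10.0), (5.0, 10.0)]  # adds boundary and critical cases

print(mutation_score(should_brake, MUTANTS, weak_catalog))    # 0.0
print(mutation_score(should_brake, MUTANTS, strong_catalog))  # 1.0
```

The weak catalog never reaches the braking threshold, so no fault becomes visible; adding a boundary case (TTC exactly 2 s) and a critical case (TTC 0.5 s) exposes all three mutants. This is the sense in which a higher mutation score indicates a better scenario catalog.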

The goal of our research is to develop generic methods that generate scenario catalogs of the highest possible quality, as measured by the mutation score, and that are therefore suitable for validating automated driving functions and driver assistance systems.